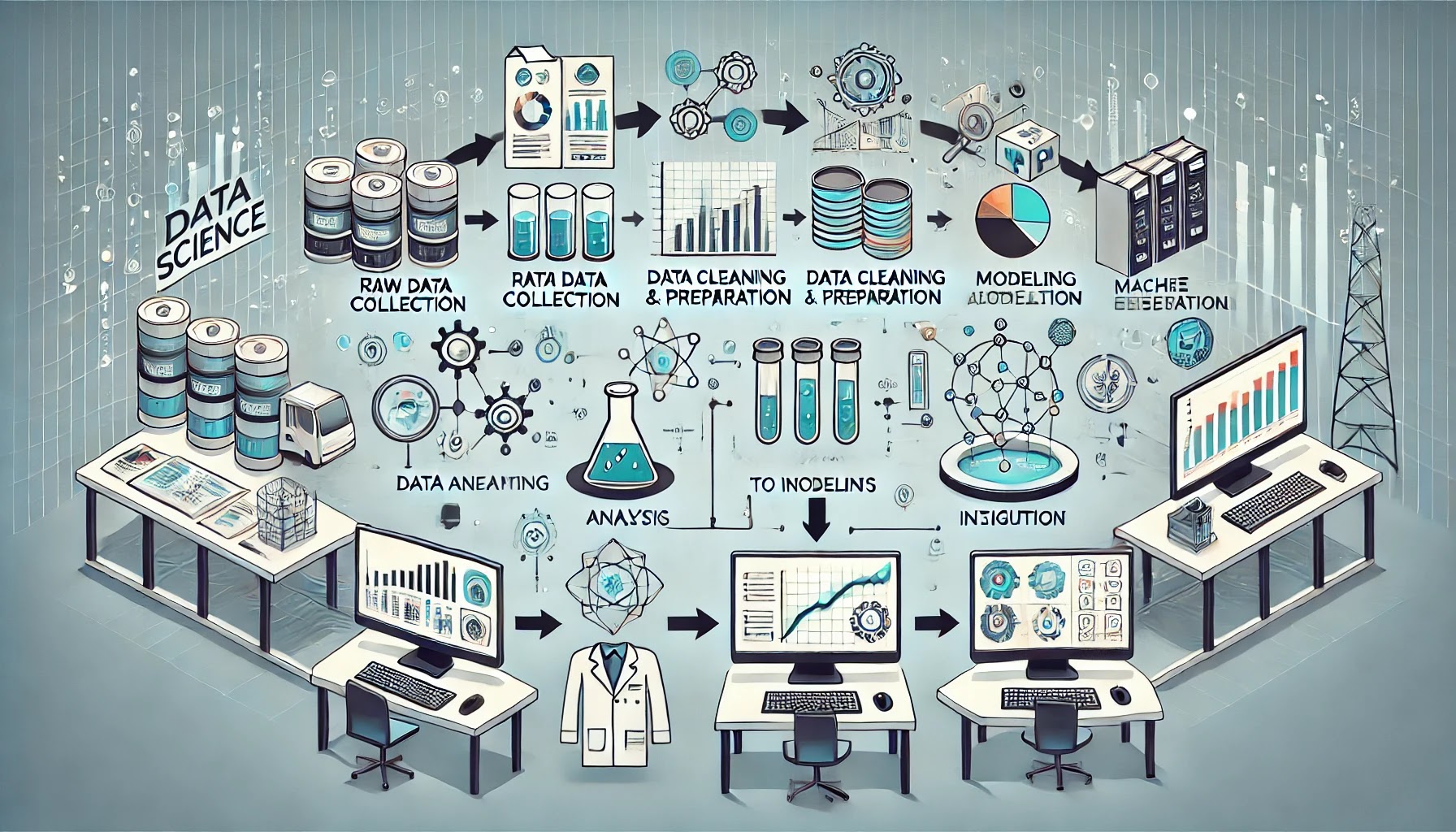

The Data Science Workflow: From Raw Data to Insights

Data science is the practice of transforming raw, often messy data into actionable insights that drive decisions and innovation. While the field is vast, most successful data science projects follow a structured workflow. This workflow ensures that data is properly handled, analyzed, and interpreted to meet the project's objectives. In this post, we’ll explore the essential steps of the data science workflow, offering a roadmap for turning raw data into valuable insights.

Step 1: Understanding the Problem

The journey begins with a clear definition of the problem. Before diving into the data, it’s crucial to ask:

- What are we trying to achieve?

- Who are the stakeholders?

- What decisions will the insights support?

Defining the goals and objectives ensures that the analysis stays focused and relevant. For example, an e-commerce company might want to predict customer churn to improve retention strategies.

Step 2: Data Collection

Once the problem is defined, the next step is to gather relevant data. Data can come from a variety of sources, such as:

- Databases: Internal company records or SQL databases.

- APIs: External data sources like weather or social media data.

- Web Scraping: Collecting data from websites.

- Sensors and IoT Devices: Real-time data from connected devices.

Ensuring the data is complete, relevant, and up-to-date is critical at this stage.
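As a rough illustration of collecting data from a SQL database, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `orders` table, its columns, and the sample rows are hypothetical and stand in for whatever internal records a real project would query.

```python
import sqlite3

# In-memory database standing in for an internal company database.
# The table name, columns, and rows are hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL, order_date TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 25.0, "2024-01-05"), (2, 40.5, "2024-01-06"), (1, 12.5, "2024-02-01")],
)

# Pull only what the analysis needs: order count and total spend per customer.
rows = conn.execute(
    "SELECT customer_id, COUNT(*), SUM(amount) FROM orders "
    "GROUP BY customer_id ORDER BY customer_id"
).fetchall()
conn.close()

print(rows)
```

Aggregating in the query keeps the extracted dataset small, which tends to make the later cleaning and analysis steps easier.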

Step 3: Data Cleaning

Raw data is rarely ready for analysis. It often contains errors, inconsistencies, or missing values. Data cleaning, also known as data preprocessing, involves:

- Handling Missing Values: Filling gaps with averages or other imputation techniques.

- Removing Duplicates: Ensuring data entries are unique.

- Correcting Errors: Fixing typos or inconsistent formatting.

- Normalizing Data: Converting data into a standard format.

Clean data is the foundation of trustworthy insights. Skipping this step can lead to misleading or inaccurate results.
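The cleaning tasks listed above can be sketched in a few lines of plain Python. The records, field names, and the choice of mean imputation here are assumptions made for illustration; a real project would more likely use a library such as pandas and pick an imputation strategy suited to the data.

```python
from statistics import mean

# Hypothetical raw records: one missing age, inconsistent city formatting,
# and one duplicated row.
raw = [
    {"id": 1, "age": 34, "city": " london "},
    {"id": 2, "age": None, "city": "Paris"},
    {"id": 1, "age": 34, "city": " london "},  # duplicate of the first row
    {"id": 3, "age": 50, "city": "PARIS"},
]

def clean(records):
    # Remove duplicates by id, keeping the first occurrence.
    seen, unique = set(), []
    for record in records:
        if record["id"] not in seen:
            seen.add(record["id"])
            unique.append(dict(record))

    # Impute missing ages with the mean of the observed ages.
    fill = mean(r["age"] for r in unique if r["age"] is not None)
    for record in unique:
        if record["age"] is None:
            record["age"] = fill
        # Normalize formatting: trim whitespace and use title case.
        record["city"] = record["city"].strip().title()
    return unique

cleaned = clean(raw)
for record in cleaned:
    print(record)
```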

Step 4: Exploratory Data Analysis (EDA)

EDA is the process of examining the cleaned data to understand its structure and patterns. It involves:

- Descriptive Statistics: Summarizing data with metrics like mean, median, and standard deviation.

- Data Visualization: Creating plots (e.g., histograms, scatter plots) to identify trends and outliers.

- Correlation Analysis: Examining relationships between variables.

EDA helps uncover hidden patterns, guiding the selection of appropriate models and techniques for deeper analysis.
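To make the descriptive statistics and correlation analysis concrete, here is a minimal sketch using only the standard library. The `visits` and `spend` values are made-up sample data, and `pearson` is a helper written for this example rather than a library function.

```python
from math import sqrt
from statistics import mean, median, stdev

# Hypothetical cleaned data: monthly visits and monthly spend for six customers.
visits = [2, 4, 5, 7, 8, 10]
spend = [20.0, 35.0, 50.0, 65.0, 70.0, 95.0]

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Descriptive statistics for one variable.
summary = {"mean": mean(spend), "median": median(spend), "stdev": stdev(spend)}

# Relationship between the two variables.
r = pearson(visits, spend)

print(summary)
print(f"correlation between visits and spend: {r:.3f}")
```

A coefficient close to 1 suggests a strong positive linear relationship, which is a hint worth checking with a scatter plot before choosing a model.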

Step 5: Feature Engineering

In this step, relevant features (variables) are created or transformed to improve the performance of predictive models. Key activities include:

- Feature Selection: Identifying the most relevant variables for analysis.

- Feature Transformation: Scaling or normalizing data to align with model requirements.

- Feature Creation: Generating new variables based on domain knowledge (e.g., combining "age" and "income" to create an "affordability index").

Good feature engineering often determines the success of a model.
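The sketch below shows one possible form of feature transformation (min-max scaling) and feature creation. The sample values, the 0.7 and 0.3 weights, and the formula for the "affordability index" are all hypothetical; in practice they would come from domain knowledge.

```python
def min_max_scale(values):
    """Rescale values linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no spread to scale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical sample data.
ages = [25, 40, 55]
incomes = [30000, 45000, 90000]

# Feature transformation: put both variables on the same scale.
scaled_age = min_max_scale(ages)
scaled_income = min_max_scale(incomes)

# Feature creation: an illustrative "affordability index" as a weighted sum.
# The weights are arbitrary assumptions, not a recommended formula.
affordability = [0.7 * i + 0.3 * a for i, a in zip(scaled_income, scaled_age)]

print(scaled_age)
print(scaled_income)
print(affordability)
```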

Step 6: Model Building

With the data prepared, the next step is building predictive or descriptive models. This involves:

- Choosing Algorithms: Selecting methods like linear regression, decision trees, or neural networks based on the problem type (classification, regression, clustering, etc.).

- Training the Model: Feeding data into the algorithm to enable it to learn patterns.

- Evaluating Performance: Using metrics suited to the problem type, such as accuracy, precision, and recall for classification or RMSE for regression, to assess the model.

The goal is to create a model that generalizes well to unseen data.
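As a small worked example of training and evaluating a model, the sketch below fits a one-variable linear regression by ordinary least squares and measures RMSE on held-out points. The data is invented, and holding out the last two points is a simplification; a real project would typically use a random split or cross-validation, usually through a library such as scikit-learn.

```python
from math import sqrt
from statistics import mean

def fit_line(x, y):
    """Ordinary least squares fit for y = slope * x + intercept."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum(
        (a - mx) ** 2 for a in x
    )
    return slope, my - slope * mx

def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    return sqrt(mean([(a - p) ** 2 for a, p in zip(actual, predicted)]))

# Hypothetical data that roughly follows y = 2x + 1 with a little noise.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [3.1, 4.9, 7.2, 9.0, 10.8, 13.1, 15.0, 16.9]

# Train on the first six points and hold out the last two as unseen data.
x_train, y_train = x[:6], y[:6]
x_test, y_test = x[6:], y[6:]

slope, intercept = fit_line(x_train, y_train)
predictions = [slope * v + intercept for v in x_test]
test_rmse = rmse(y_test, predictions)

print(f"slope={slope:.3f}, intercept={intercept:.3f}, test RMSE={test_rmse:.3f}")
```

Measuring error on data the model did not see during training is what gives an estimate of how well it generalizes.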

Step 7: Data Visualization and Interpretation

The best insights are those that can be effectively communicated. Data visualization tools like Tableau, Power BI, or Matplotlib are used to create intuitive charts, graphs, and dashboards.

- Dashboards: Offer real-time insights for stakeholders.

- Storytelling: Use visuals to narrate the findings and connect them to business goals.

Clear communication ensures stakeholders understand the results and can act on them.

Step 8: Deployment and Monitoring

The final step is putting the model or insights into action. This might involve:

- Deploying a Model: Integrating it into a production environment (e.g., a recommendation system for an e-commerce site).

- Sharing Reports: Delivering findings to stakeholders for strategic decision-making.

- Monitoring and Updating: Ensuring the model continues to perform as expected and adapting it as data evolves.
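One simple way to think about monitoring is to compare recent prediction error against the error measured at deployment time. The function below is a minimal sketch of that idea; the name `needs_retraining`, the 25% tolerance, and the error values are assumptions for illustration, not a standard rule.

```python
from statistics import mean

def needs_retraining(baseline_error, recent_errors, tolerance=0.25):
    """Return True when the average recent error exceeds the baseline
    by more than the given fractional tolerance."""
    return mean(recent_errors) > baseline_error * (1 + tolerance)

# Hypothetical error values: the baseline measured at deployment,
# followed by two batches of errors observed in production.
baseline = 1.0
stable_batch = [1.0, 1.1, 1.2]
drifting_batch = [1.4, 1.5, 1.6]

print(needs_retraining(baseline, stable_batch))
print(needs_retraining(baseline, drifting_batch))
```

A check like this could run on a schedule, with a flagged result prompting a closer look at whether the incoming data has changed.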

Conclusion

The data science workflow is a structured yet flexible process that transforms raw data into actionable insights. From understanding the problem to deploying a solution, each step plays a critical role in achieving meaningful outcomes. By following this workflow, data scientists can unlock the full potential of data, driving innovation and enabling smarter decision-making across industries.
